Self-Organised Criticality (SOC)

During my ecology degree, whilst studying ecosystem and habitat change, I learned about Self-Organised Criticality (SOC), and I was fascinated by how it explained the precursors to seemingly dramatic changes. More recently, I’ve been pondering how the concept of SOC can be applied to organisational change as well as the frequency and severity of incidents and failures.

SOC illustrates how, in certain complex systems, very small local interactions and feedback loops can lead to large-scale, emergent behaviours such as sudden population collapses or wildfires. A massive forest fire can occur if enough dry fuel has built up over a long period, or a large-scale change in plant species can occur because the soil has very gradually become more acidic. In these cases, seemingly tiny changes over time lead to sudden, unpredictable and dramatic change, usually because some previously invisible (to us) threshold was crossed.

As well as ecosystems, SOC has been used to explain things like the dynamics of earthquakes and the stock market. SOC also provides insight into “power laws”, where large events are rarer than small ones but follow a consistent statistical pattern: each time the size of an event doubles, its frequency falls by the same proportion.
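In symbols, a power law says that the number of events N of size s scales as a fixed negative power of s. The notation here (N, s and the exponent τ) is my own shorthand rather than anything from the studies mentioned below. The value of τ varies from system to system, so treat it as a placeholder:

$$
N(s) \propto s^{-\tau}
\quad\Longrightarrow\quad
\log N(s) = -\tau \log s + c,
\qquad
\frac{N(2s)}{N(s)} = 2^{-\tau}
$$

The last ratio is what the “consistent pattern” means. Doubling the size of an event cuts its frequency by the same factor 2^(-τ), whether the event is small or large. That is why power-law data plot as a straight line on log-log axes.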

The Sandpile Model

SOC describes how certain complex systems (maybe all, though I’m not sure and I don’t think anyone else is!) naturally evolve over time into a critical state. Once a system is in this critical state, a minor disturbance can trigger a significant, cascading chain of events. This idea was introduced in the late 1980s by physicist Per Bak and his colleagues, who used a simple “sandpile” metaphor to illustrate it. SOC is often called “the sandpile model”, but the sandpile is actually a simplification intended to aid understanding.

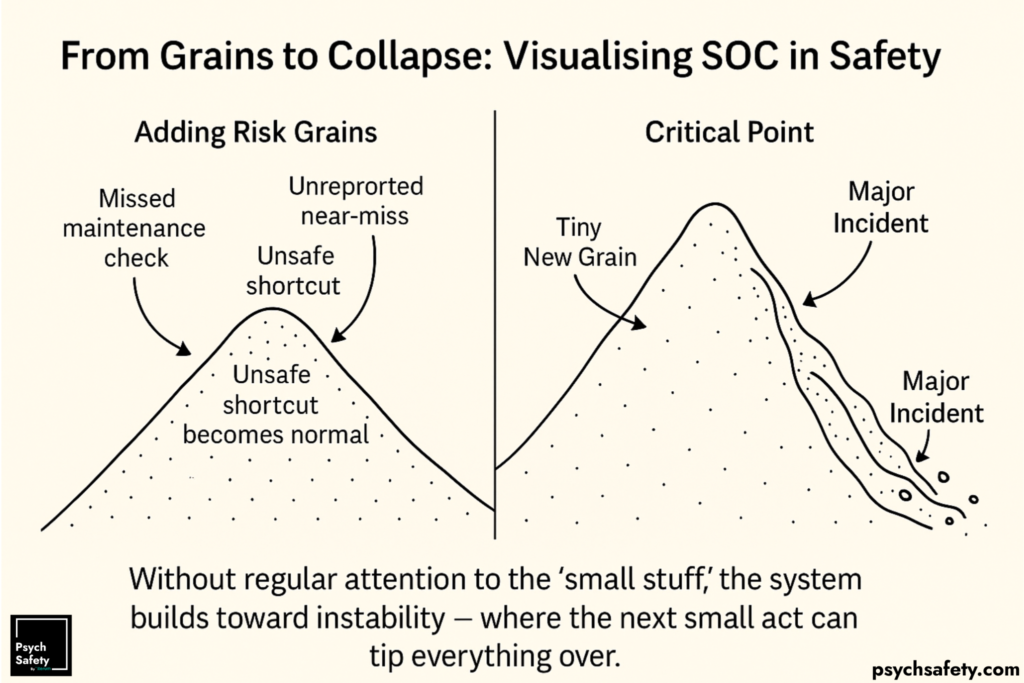

Bak’s now-classic way to understand SOC is through the image of grains of sand slowly dropped onto a flat surface:

- Small Changes: Grains of sand are dropped one by one onto a flat surface at random positions. At first, we see various piles of sand grow steadily.

- Building Towards Criticality: As more grains are added, the sides of some piles get steeper.

- Small Avalanches: Eventually, when the slope on one of the piles gets too steep, dropping a single new grain causes a small avalanche of sand.

- Widespread Cascades: Over time, the pile becomes organised around a critical slope. Now a single grain can sometimes trigger a much larger landslide, while at other times another grain causes no noticeable collapse at all.
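The sandpile metaphor has a standard computational form, the Bak-Tang-Wiesenfeld model. Below is a minimal Python sketch of it under simplifying assumptions of my own:

- A square grid stands in for the flat surface.
- A site holding 4 or more grains topples, passing one grain to each of its four neighbours.
- Grains pushed off the edge are lost.
- Avalanche size is counted as the number of topplings one dropped grain causes.

The name `simulate_sandpile` and its parameters are hypothetical and purely illustrative.

```python
import random

TOPPLE_AT = 4  # a site with this many grains is unstable (one grain per neighbour)


def simulate_sandpile(size=20, grains=10000, seed=0):
    """Drop grains one at a time on a size x size grid; return avalanche sizes.

    Simplified Bak-Tang-Wiesenfeld rules (assumed for illustration): a site
    holding TOPPLE_AT or more grains topples, sending one grain to each of its
    four neighbours; grains pushed past the edge fall off and are lost.
    The avalanche size is the number of topplings caused by a single drop.
    """
    rng = random.Random(seed)
    grid = [[0] * size for _ in range(size)]
    avalanche_sizes = []
    for _ in range(grains):
        # Add one grain at a random site.
        r, c = rng.randrange(size), rng.randrange(size)
        grid[r][c] += 1
        unstable = [(r, c)] if grid[r][c] >= TOPPLE_AT else []
        topples = 0
        while unstable:
            i, j = unstable.pop()
            if grid[i][j] < TOPPLE_AT:
                continue  # already relaxed by an earlier toppling
            grid[i][j] -= 4  # one grain to each of the four neighbours
            topples += 1
            for ni, nj in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
                if 0 <= ni < size and 0 <= nj < size:  # off-grid grains are lost
                    grid[ni][nj] += 1
                    if grid[ni][nj] >= TOPPLE_AT:
                        unstable.append((ni, nj))
            if grid[i][j] >= TOPPLE_AT:
                unstable.append((i, j))  # still overloaded, topple again later
        avalanche_sizes.append(topples)
    return avalanche_sizes


if __name__ == "__main__":
    sizes = simulate_sandpile()
    quiet = sum(1 for s in sizes if s == 0)
    small = sum(1 for s in sizes if 1 <= s < 10)
    large = sum(1 for s in sizes if s >= 100)
    print(f"no avalanche: {quiet}, small (1-9 topplings): {small}, large (100+): {large}")
```

Every drop in this sketch is identical. Yet once the pile has built up, most drops cause no toppling or only a handful, while a few set off cascades that sweep across much of the grid. Nothing about the grain that triggers a big avalanche is special; what differs is the state of the pile.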

The point is that the system (the pile) may be balanced near the edge of a chain reaction, but it’s very hard, if not impossible, to know where that edge is before a collapse. In fact, it isn’t really the grain of sand that causes the avalanche; it’s the shape of the pile. Here’s a great video by Sean Brady explaining it.

The sandpile model offers a simplified way of understanding SOC: the balancing of the pile at a threshold between stability and avalanche. But it also illustrates some broader aspects of SOC as a classic model of complex systems. According to SOC, such a system has these features:

- Critical state: A “critical” state is where the system is poised so that large fluctuations can occur from small changes – the single grain of sand triggering the avalanche.

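- Self-organised: The system moves toward a critical condition on its own, without anyone or anything outside it tuning it there. In the sandpile, grains are simply added and the pile settles itself at the critical slope. This happens in natural systems such as ecosystems or planetary systems, but also in things like economies and large organisations.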

- Long-range effects: Due to interdependence, feedback loops and coupling, small events in one region can influence distant parts of the system.

- Cascading behaviour: Disturbances can cause chain reactions of many different sizes, from small changes to dramatic transformations.

This last point is in fact the hallmark of self-organised critical systems.

Safety-Organised Criticality (SOCy)

When reflecting on the characteristics of SOC systems, I noticed how similar the criteria are to those in Charles Perrow’s “Normal Accident” theory, which suggests that in certain complex and tightly coupled systems, accidents are inevitable – not necessarily due to human error or poor design, but because of the system’s very structure.

And I wondered how SOC could help frame the dynamics of health and safety, such as the occurrence of severe injuries and fatalities (SIFs), near-misses, and other incidents within organisations.

An analysis of data from the U.S. Bureau of Labor Statistics shows that the frequency of workplace accidents follows a power-law distribution with respect to their severity (measured by days away from work). It also suggests that, in general, reducing the occurrence of minor accidents can help suppress the entire distribution of accidents, thereby reducing the number of severe incidents. Other studies, such as this one on high-casualty fires in China, show similar power-law patterns for size and severity. This kind of power-law behaviour is a characteristic signature of SOC.
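For anyone wanting to check their own incident data for this kind of pattern, a common starting point is to estimate the power-law exponent. The sketch below uses the standard continuous maximum-likelihood estimator, as set out by Clauset, Shalizi and Newman (2009). It is not necessarily the method used in the studies cited here.

The function name, the choice of `x_min` and the synthetic Pareto data in the example are all assumptions for illustration, not real incident records. Counts such as days away from work are discrete, so for them this continuous estimator is only an approximation.

```python
import math
import random


def estimate_power_law_exponent(sizes, x_min):
    """Estimate alpha for a tail p(x) proportional to x**(-alpha), x >= x_min.

    Continuous maximum-likelihood estimator:
        alpha = 1 + n / sum(ln(x_i / x_min)),
    summed over the n observations at or above x_min (x_min must be > 0).
    """
    tail = [x for x in sizes if x >= x_min]
    log_sum = sum(math.log(x / x_min) for x in tail)
    if not tail or log_sum == 0:
        raise ValueError("need observations strictly above x_min")
    return 1 + len(tail) / log_sum


if __name__ == "__main__":
    # Hypothetical synthetic "severity" data, not real incident records.
    # random.paretovariate(1.5) draws x >= 1 with density proportional to x**-2.5.
    rng = random.Random(42)
    synthetic = [rng.paretovariate(1.5) for _ in range(5000)]
    print(round(estimate_power_law_exponent(synthetic, x_min=1.0), 2))
```

On this synthetic sample the estimate should land close to 2.5, the exponent the data were generated with. On real data, the harder parts are choosing `x_min` and checking whether a power law fits better than alternatives such as a lognormal.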

I think SOC helps explain how small or routine hazards can build up unnoticed and lead to large, unexpected, high-severity events. Many safety models seem to reflect the dynamics of SOC even if they don’t name it explicitly. Perhaps that is because the term is still relatively unknown outside ecology and complexity science, though it has already been used to describe the dynamics of security failures in aviation.

Here I’m playing with the idea of Safety-Organised Criticality (SOCy), drawing on the classic sandpile model of SOC. In that model, each grain of sand is a tiny addition that might settle harmlessly, or might trigger a landslide. Similarly, in safety management, each small hazard, unsafe act, or near-miss adds a “grain” of risk to the system.

On their own, these small hazards may seem insignificant. But together, through daily processes, equipment use, and behavioural norms, they can gradually push the overall system toward a critical state. Once there, a seemingly minor catalyst, such as a small procedural deviation or an overlooked warning sign, can trigger a major incident.

In SOC, small avalanches are frequent and large ones are rare: avalanche sizes follow a power-law distribution. Applying that to safety:

- Near-Misses are like small avalanches. They happen more often, may go unreported or unaddressed, but should be seen as weak signals of accumulating risk.

- Major Incidents or SIFs (Serious Injury and Fatality events) are the large avalanches. They are rare, far more serious, and appear to come ‘out of the blue’. But in SOC terms, they arise from the same underlying processes that produce smaller events.

Without intentional interventions, a SOC-like system naturally drifts toward the critical point. Ignoring near-misses or minor incidents can mean that we’re blind to the warning signs of growing systemic instability.

Drift into Failure and Latent Conditions

In safety, concepts such as Perrow’s “normal accidents”, Dekker’s “Drift into Failure”, the HOP red line, or Vaughan’s “normalisation of deviance” dovetail with SOC.

No one intentionally makes the system more fragile. Instead, it’s shaped by the realities of work: small workarounds to get the job done, minor rule-bending to meet production pressure and demands, or natural variations in how tasks are carried out by operators. These behaviours and actions by people within the system are adaptations that reflect the system’s self-organising nature, and can gradually steer it toward a critical state.

Over time, these small deviations from safe practices can become normalised. Since each one doesn’t lead to an immediate incident, it gets reinforced – “it was fine last time, so it’ll be fine next time.” But each act is another metaphorical grain of sand in the pile.

While we can’t predict exactly when the avalanche will occur, the risk grows as unaddressed issues accumulate. Eventually, everything aligns just right for a major accident – echoing James Reason’s Swiss Cheese Model (SCM), where multiple latent flaws line up. Like in SOC, it’s not a single failure, but the alignment of many small ones that allows disaster to unfold.

A common (though arguably misplaced) critique of Reason’s classic Swiss cheese model is that the holes – representing weaknesses in defence layers – are somewhat static. They don’t themselves shift or change in response to threats. In contrast, SOC explicitly shows us that the emergence of a threat can actually change the system itself: making that threat not only more likely, but also potentially more severe.

In this view, a hazard passing through one layer of defence can disrupt or weaken others – enlarging the holes, moving them, or even creating new ones. The system, under increasing strain, reaches a tipping point, and what follows is, in SOC terms, the avalanche: a cascading failure triggered by what might appear to be a minor or innocuous proximate cause.

Take a fire in a crowded concert venue. There will likely be multiple controls in place to prevent or manage the fire, multiple layers of defence. But what’s harder to predict is how people – especially if excited or inebriated – will respond. The fire itself might cause limited harm. But the resulting panic and crowd surge could escalate the situation into a far more devastating event.

This illustrates how, in SOC, systems spontaneously converge toward a precarious state. No single action seems immediately risky, but over time these actions load the system toward an invisible threshold. Once that threshold, that tipping point, is crossed, its effects are amplified by the interactions and interdependencies within the system, especially human (sociotechnical) ones.

SOC and Organisational Culture

What could SOC tell us in relation to organisational culture?

- Safety Culture: If near-misses are hidden because of a lack of psychological safety, or are not taken seriously, the ‘grains’ of risk gradually accumulate, edging ever closer to criticality.

- Underlying Pressures: Production pressures, groupthink, incentives, under-resourcing, or complacency can quietly increase the “steepness” of the slope, making incidents more likely.

- Triggering Events: A small procedural mistake, deviation, or failure under high-consequence conditions might cascade into an avalanche: a major incident. But it would be wrong to think that this small event was the sole ‘cause’ of the incident.

The self-organised nature of the failure comes from how people at the sharp end of work, as well as supervisors and managers, constantly adapt work processes without explicit instruction to do so. Instead of blaming them, we must recognise that in many cases this is simply necessary to get the job done: without this adaptation, work would grind to a halt or outcomes may in fact be less safe. However, over time, these local adaptations as well as mistakes, slips and failures can inadvertently push the system to a precarious and unpredictable threshold.

It might be worth exploring whether SOC-like dynamics are at play in our organisations. Some potential indicators include:

- Frequent near-misses with low visibility

  - Minor incidents or unsafe acts occur repeatedly, but they don’t trigger widespread attention or alarm.

  - They may go unreported or be normalised as “how we do things around here”.

- Long periods of apparent stability

  - The organisation appears stable and there are few recorded incidents. (See, for example, the Deepwater Horizon story, where the company went 7 years without a recorded Lost Time Incident.)

  - As a result, there is a tendency to believe that everything is fine, which leads to further complacency.

- Sudden high-impact events

  - An accident occurs that seems disproportionate to its immediate trigger (“How could that cause this?”).

  - Post-incident investigations often uncover a network of latent weak signals and risks that had been accumulating unseen and that, in hindsight, may even seem obvious.

Long periods without major incidents can mask accumulating risk. All the while, the slope may be getting steeper; the pile just hasn’t “slid” yet.

So what can we do about it?

Applying SOC to safety highlights how incremental, everyday behaviour and process can accumulate into large-scale failures. Over time, organisations can “load” risk through routine operations, overlooked near-misses, and cultural assumptions that keep everyone comfortable and convinced the overall risk is low. This continues until a seemingly minor event triggers a major avalanche.

We need ways to probe and better understand what’s going on during seemingly stable periods, so that we can reveal and address deviance and drift before they cascade into something serious.

Regular safety audits, learning teams, debriefs, After Action Reviews (AARs), “safety culture pulses”, simulations, observations, and candid, psychologically safe conversations with those doing the work can all help.

Review of Key Elements of Safety-Organised Criticality:

- Near-misses are crucial warning signs; analogous to the small avalanches in SOC.

- Major incidents can arise from seemingly trivial triggers once the organisation is near a “critical state.”

- Psychological safety and organisational learning can mitigate the worst outcomes by continually preventing, catching, mitigating, and learning from small hazards before they cascade.

Viewing safety through an SOC lens may help remind us that no single event, action or change typically “causes” a major accident. Rather, the power-law distribution of incidents suggests that large incidents and SIFs emerge from a build-up of interdependent small factors. Each is often individually tolerable, but collectively they lead the system to the point of criticality.

A note on SOC and Chaos

I’ve come across some conflation of “chaos” with SOC. This is understandable: both chaos theory and SOC deal with small changes that lead to large effects, and they often use similar concepts, such as power laws. But the underlying mechanisms of SOC and chaos are different.

A self-organised critical system moves towards a tipping point through its own continuous internal processes. It is difficult to predict and demonstrates emergence, meaning patterns that cannot easily be predicted or fully explained by examining the system’s individual components separately.

Chaos (in the technical, mathematical sense) is about extreme sensitivity to initial conditions. Minuscule differences in a system’s starting state grow exponentially, so that it quickly becomes difficult or impossible to predict what the system will do next.

SOC is less about initial conditions and more about a system that self-tunes to a tipping point through incremental changes and local feedback loops, making it prone to bursts of change.

While SOC and chaos share some broad similarities, one doesn’t imply the other.
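To make the chaos side of that contrast concrete, here is a minimal Python sketch of “sensitivity to initial conditions”. It uses the logistic map at r = 4, a textbook chaotic system that I chose for illustration; it is not a model of safety or of SOC, and the function name is hypothetical.

Two runs that start 1e-10 apart track each other closely for a while, then diverge completely after a few dozen steps. An SOC system’s large events, by contrast, come from slow loading towards a critical state rather than from the amplification of a tiny difference in where it started.

```python
def logistic_trajectory(x0, r=4.0, steps=50):
    """Iterate the logistic map x -> r * x * (1 - x); chaotic for r = 4."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


if __name__ == "__main__":
    a = logistic_trajectory(0.2)
    b = logistic_trajectory(0.2 + 1e-10)  # a minuscule difference in the start
    for step in (0, 10, 20, 30, 40, 50):
        # The gap roughly doubles each step on average until it is as large as x itself.
        print(step, abs(a[step] - b[step]))
```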

Related newsletters:

Utilisation, slack, and resilience

Dr Richard Cook: How Complex Systems Fail

References

Bak, P., Tang, C. and Wiesenfeld, K., 1987. Self-organized criticality: An explanation of the 1/f noise. Physical Review Letters, 59(4), pp.381-384.

Bak, P., 1996. How nature works: the science of self-organized criticality. Springer Science & Business Media.

Banja, J., 2010. The normalization of deviance in healthcare delivery. Business horizons, 53(2), pp.139-148.

Clark, D., Lawton, R., Baxter, R., Sheard, L. and O’Hara, J.K., 2025. Do healthcare professionals work around safety standards, and should we be worried? A scoping review. BMJ Quality & Safety, 34(5), pp.317-329.

Frigg, R., 2003. Self-organised criticality—what it is and what it isn’t. Studies in History and Philosophy of Science Part A, 34(3), pp.613-632.

Gell-Mann, M., 1995. What is Complexity? Remarks on simplicity and complexity by the Nobel Prize-winning author of The Quark and the Jaguar. Complexity, 1. https://complexity.martinsewell.com/Gell95.pdf

Ghil, M., Yiou, P., Hallegatte, S., Malamud, B.D., Naveau, P., Soloviev, A., Friederichs, P., Keilis-Borok, V., Kondrashov, D., Kossobokov, V., Mestre, O., Nicolis, C., Rust, H.W., Shebalin, P., Vrac, M., Witt, A. and Zaliapin, I., 2011. Extreme events: dynamics, statistics and prediction. Nonlinear Processes in Geophysics, 18, pp.295-350.

Kahol, K., Vankipuram, M., Patel, V.L. and Smith, M.L., 2011. Deviations from protocol in a complex trauma environment: errors or innovations?. Journal of biomedical informatics, 44(3), pp.425-431.

Lu, S., Liang, C., Song, W. and Zhang, H., 2013. Frequency-size distribution and time-scaling property of high-casualty fires in China: Analysis and comparison. Safety Science, 51, pp.209-216.

Malamud, B.D., Morein, G. and Turcotte, D.L., 1998. Forest fires: an example of self-organized critical behavior. Science, 281(5384), pp.1840-1842.

Mauro, J.C., Diehl, B., Marcellin Jr, R.F. and Vaughn, D.J., 2018. Workplace accidents and self-organized criticality. Physica A: Statistical Mechanics and its Applications, 506, pp.284-289.

McFarlane, P., 2023. A new inter-disciplinary relationship: introducing self-organized criticality to failures in aviation security. Journal of Transportation Security, 16(1), p.12.

Pruessner, G., 2012. Self-organised criticality: theory, models and characterisation. Cambridge University Press. https://assets.cambridge.org/97805218/53354/frontmatter/9780521853354_frontmatter.pdf

Sawhill, B.K., 1993. Self-organized criticality and complexity theory. Nadel L., Stein DL (eds).

Smalley Jr, R.F., Turcotte, D.L. and Solla, S.A., 1985. A renormalization group approach to the stick‐slip behavior of faults. Journal of Geophysical Research: Solid Earth, 90(B2), pp.1894-1900.

Sornette, D., Johansen, A. and Bouchaud, J.P., 1996. Stock market crashes, precursors and replicas. Journal de Physique I, 6(1), pp.167-175.

Zhou, F., Liu, X. and Wang, F., Size-Frequency Distribution Characteristic of Fatalities in Safety Accidents and its Industry Dependency. Available at SSRN 4697534.

Psychological Safety in Practice

Communities of Practice

We often talk about introducing psychological safety Communities of Practice with our clients, in order to help build and maintain momentum in psychological safety practice, and embed continuous shared learning across an organisation. Here’s a great rundown and illustration of the CoP idea and associated terms in the Complex Systems Frameworks Collection of Simon Fraser University.

A Safe Place to Stammer

I absolutely love this idea by Dental Nurse Becca Jones, who wanted to make sure that people coming to her dental practice knew they could stammer without being rushed or having their sentences finished for them. It’s a great way of reducing the anxiety of speaking, particularly if you have a speech difference.

“…for many of us who stammer, stammering itself isn’t what we fear most. The real anxiety lies in the uncertainty — how will others react? Will they laugh? Interrupt us? Ask if we’ve forgotten our name? Will they dismiss us with awkward jokes? The list, unfortunately, goes on. We often walk into spaces without knowing whether we’re entering a safe and understanding environment. Are the staff trained to recognise and support people who stammer, or have they simply never encountered it before? A huge thing for me when getting nervous going to places, is the not knowing how I’m going to be treated. It can be terrifying.”

From ‘Comfort-Seeking’ to ‘Problem-Sensing’

Thanks to Kevin Somerton in the psychological safety community for sharing this powerful piece by James Titcombe, which looks at culture and failures in the NHS and suggests ways forward. As Kevin says, it’s “strong on psychological safety, without mentioning psychological safety” and describes “a culture where the primary concern is often not ‘what might be going wrong?’ but ‘how can we show that everything is okay?’”

The post Safety-Organised Criticality appeared first on Psych Safety.